Using huge pages in Linux applications

Basic knowledge about virtual memory

The memory in modern computers and operating systems uses paged virtual address space. Paging means that the memory is divided into fixed-size areas.

An address space is the set of addresses available to a process. However, what it actually means to read one byte from address X or to write 8 bytes starting at address Y depends on what the address is mapped to. It may involve reading a value stored in RAM, writing to a file in the file system, reading a value from swap, etc.

The address space seen by a process is an abstraction over RAM, swap, file system, external devices, etc. That’s the reason why we call it virtual. The OS stores all the necessary information needed for choosing the right action on access to virtual memory.

Virtual to physical address translation

One of the most frequent operations in applications involves accessing the stack or heap. Both are stored in the virtual address space in anonymous pages, i.e. pages that are not backed by any file in the file system. They reside in RAM (unless moved to swap), so accessing some address X on stack or heap means accessing some address Y in physical memory.

The processes solely operate on virtual addresses and are unaware of the underlying physical addresses. To make it work, a translation is required. The operating system stores the mappings required for such translations.

This translation is made at the page level. It means that when virtual page X is mapped into physical page Y, every address keeps the same offset within the page. Less formally, the whole virtual page is mapped into one physical page. The conclusion is that once the CPU knows the translation of any address located in a virtual memory page, it knows the translations of all the addresses that belong to the same virtual page.
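The page-level translation can be sketched as a toy model. This is not how the kernel or the MMU stores mappings: the 4 KB page size, the `PageTable` alias, and the `translate` and `make_address` functions are assumptions made only for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <unordered_map>

// Toy model: 4 KB pages, so the low 12 bits of an address are the offset.
constexpr std::uint64_t kPageShift = 12;
constexpr std::uint64_t kPageSize = std::uint64_t{1} << kPageShift;

// Hypothetical page table: virtual page number -> physical page number.
using PageTable = std::unordered_map<std::uint64_t, std::uint64_t>;

// Combines a page number and an offset inside that page into one address.
std::uint64_t make_address(std::uint64_t page, std::uint64_t offset) {
    assert(offset < kPageSize);
    return (page << kPageShift) | offset;
}

// Translates a virtual address; returns std::nullopt if the page is not mapped.
std::optional<std::uint64_t> translate(const PageTable& table, std::uint64_t virt) {
    const std::uint64_t page = virt >> kPageShift;        // which virtual page
    const std::uint64_t offset = virt & (kPageSize - 1);  // position inside the page
    const auto it = table.find(page);
    if (it == table.end()) {
        return std::nullopt;
    }
    // Only the page number is replaced; the offset is carried over unchanged.
    return make_address(it->second, offset);
}
```

With a table that maps virtual page 0x10 to physical page 0x7, the address 0x10ABC translates to 0x7ABC: one table entry is enough for every address in that page.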

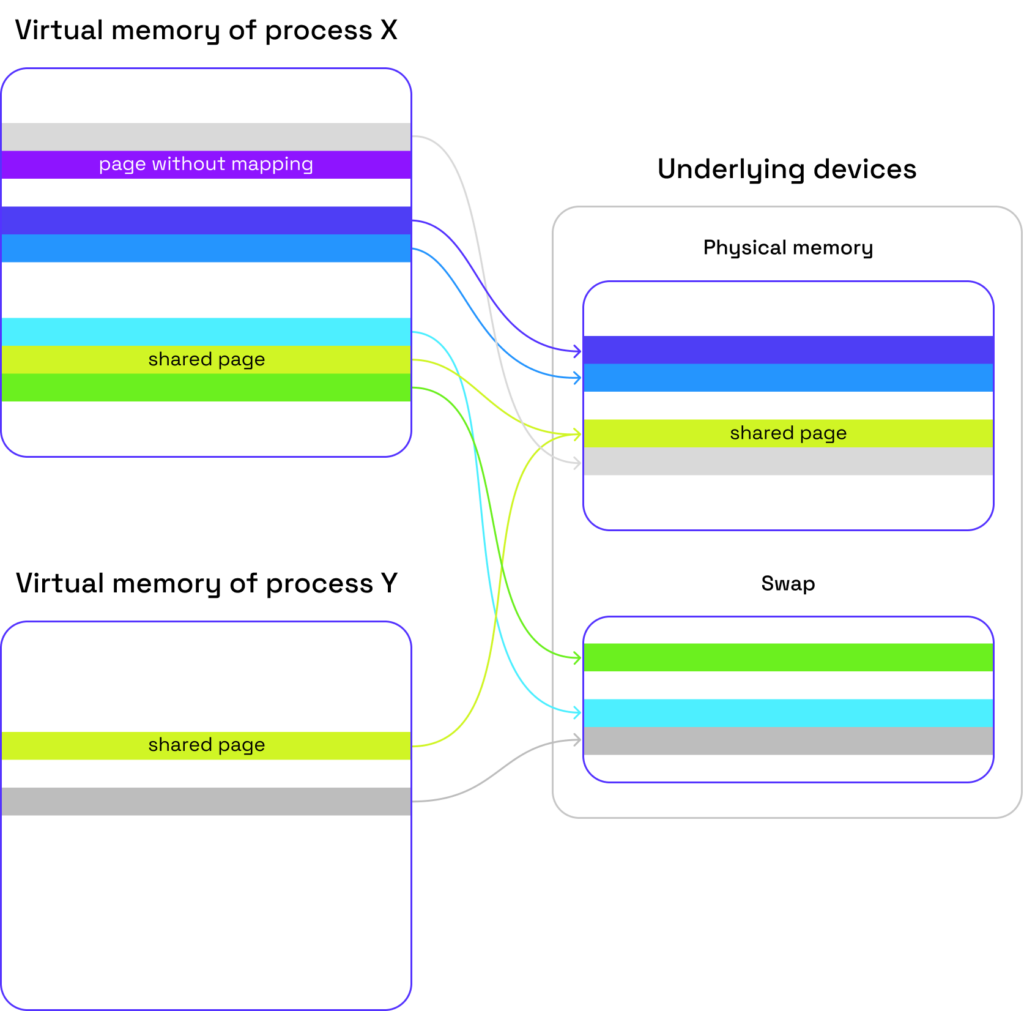

Let’s look at the image. It is greatly simplified:

- all pages have the same size

- only anonymous virtual pages are used

- the locations of the pages in virtual address spaces don’t resemble their real locations

- there are only two processes

It should help to grasp the key ideas anyway:

- Every anonymous page in virtual memory is either mapped into exactly one page in physical memory (or swap) or not mapped at all. The contents of a virtual page and its counterpart in physical memory (or in swap) are identical.

- Every process has its own virtual address space. In particular, it is common that the same virtual address in different processes means different physical addresses.

- More than one virtual page may be mapped into the same physical page. If two or more processes have access to the same physical page, this page is shared.

Virtual to physical address translation in memory caches

One may wonder how virtual to physical memory translation affects memory caches (L1-L3). Turns out that the vast majority of contemporary CPUs use caches that need to get the physical address on every memory access. Caches that operate fully on virtual memory are rare.

Intuitively you may imagine that translations between virtual and physical addresses are very common. Moreover, such translations must have very low latency. Otherwise, they will slow down caches, making them useless.

Translation Lookaside Buffer

That’s the point of the story where the Translation Lookaside Buffer (TLB) comes into play. The TLB is a highly specialized, relatively small cache that stores recent translations of virtual pages into physical pages. On many modern processors, there is more than just one level of TLB. Moreover, the L1 TLB is usually divided into separate parts for instructions and data.

TLB miss

When the TLB doesn’t contain the page we’re looking for, it’s a so-called TLB miss. In such a case, the processor looks for the mapping in structures stored in physical memory. Such a lookup requires a few reads from RAM. As you may imagine, this process is very slow when compared to a read from the TLB cache. Frequent TLB misses may hurt performance significantly.
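To get a feeling for hits and misses, here is a minimal sketch of a toy TLB. It assumes a fully associative cache with LRU replacement, which is a simplification: real TLBs differ in associativity, replacement policy, and number of levels. The `ToyTlb` class and its members are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <list>
#include <unordered_map>

// Toy fully associative TLB with LRU replacement (an assumption, see above).
class ToyTlb {
public:
    // page_shift is log2 of the page size, e.g. 12 for 4 KB pages.
    ToyTlb(std::size_t capacity, unsigned page_shift)
        : capacity_(capacity), page_shift_(page_shift) {
        assert(capacity > 0);
    }

    // Looks up the page containing `virt`; returns true on a hit.
    bool access(std::uint64_t virt) {
        const std::uint64_t page = virt >> page_shift_;
        const auto it = index_.find(page);
        if (it != index_.end()) {
            lru_.splice(lru_.begin(), lru_, it->second);  // mark as most recent
            return true;
        }
        ++misses_;
        if (lru_.size() == capacity_) {  // evict the least recently used page
            index_.erase(lru_.back());
            lru_.pop_back();
        }
        lru_.push_front(page);
        index_[page] = lru_.begin();
        return false;
    }

    std::size_t misses() const { return misses_; }

private:
    std::size_t capacity_;
    unsigned page_shift_;
    std::size_t misses_ = 0;
    std::list<std::uint64_t> lru_;  // front = most recently used page
    std::unordered_map<std::uint64_t, std::list<std::uint64_t>::iterator> index_;
};
```

Two accesses that fall into the same page cost at most one miss, while touching more distinct pages than the TLB has entries makes earlier translations disappear.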

Page fault

What happens if the page we are looking for is not present in the structures stored in RAM? Is it an error?

The answer is: not always. That’s the moment when the OS must take over control. The access may be simply improper (that would generate the infamous segmentation fault signal). There are, however, some scenarios when the access is perfectly valid. For example, the page may have been moved to swap in the meantime, or the page may not exist because it is mapped to a file that may be opened and read if necessary.

Handling page faults is really slow, so we don’t want to see them often. That’s the reason why we have TLB and translation structures in RAM. The fewer interventions in virtual memory handling from the OS, the better.
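Page faults can be observed from user space. The sketch below counts minor page faults with getrusage() while writing to freshly mapped anonymous pages for the first time, which is one of the perfectly valid cases the OS resolves silently. The function name `faults_when_touching` is hypothetical, and the exact count depends on the kernel and its configuration.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <sys/mman.h>
#include <sys/resource.h>
#include <unistd.h>

// Returns the number of minor page faults observed while touching
// `page_count` freshly mapped anonymous pages, or std::nullopt on error.
std::optional<long> faults_when_touching(std::size_t page_count) {
    assert(page_count > 0);
    const long page_size = sysconf(_SC_PAGESIZE);
    if (page_size <= 0) {
        return std::nullopt;
    }
    const std::size_t step = static_cast<std::size_t>(page_size);
    const std::size_t length = page_count * step;

    void* mem = mmap(nullptr, length, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED) {
        return std::nullopt;
    }

    rusage before{};
    rusage after{};
    getrusage(RUSAGE_SELF, &before);
    auto* bytes = static_cast<volatile char*>(mem);
    for (std::size_t i = 0; i < page_count; ++i) {
        bytes[i * step] = 1;  // first write to each page
    }
    getrusage(RUSAGE_SELF, &after);

    munmap(mem, length);
    return after.ru_minflt - before.ru_minflt;
}
```

Comparing the result for a small and a large `page_count` gives a rough impression of how many OS interventions a first pass over new memory causes.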

Huge pages

The memory is divided into fixed-size areas, both virtual and physical. The most widespread page size is 4 KB. However, often it is possible to use bigger pages. They are called huge pages. Supported page sizes are limited both by the hardware and kernel used. Usually, on x86-64 machines, available sizes are 4 KB, 2 MB, and 1 GB. Operating systems supporting huge pages allow pages of different sizes to co-exist at the same time.

What is the benefit of using huge pages?

When using huge pages, it is very likely that you will use significantly fewer memory pages because you may put more data on a single page. If you use fewer memory pages, the number of distinct translations between virtual and physical pages will drop. That, in general, decreases the number of TLB misses.

Whether it truly happens depends on memory access patterns in your application and TLB implementation details. For example, in most processors, TLB’s capacity for huge pages is smaller than for standard, 4 KB pages. However, it is compensated by the difference in size between a huge page and a standard one, so the total size of virtual memory covered by efficient TLB translations should be higher for huge pages.
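The trade-off between fewer entries and bigger pages is simple arithmetic. In the sketch below the entry counts (64 for 4 KB pages, 32 for 2 MB pages) are hypothetical values chosen only for illustration; real numbers depend on the CPU model.

```cpp
#include <cstdint>

// Amount of virtual memory the TLB can translate without a miss.
constexpr std::uint64_t tlb_reach_bytes(std::uint64_t entries, std::uint64_t page_size) {
    return entries * page_size;
}

constexpr std::uint64_t kKiB = 1024;
constexpr std::uint64_t kMiB = 1024 * kKiB;

// Hypothetical entry counts, not taken from any specific processor.
constexpr std::uint64_t kEntries4K = 64;
constexpr std::uint64_t kEntries2M = 32;

// Half as many entries, yet 256 times more memory covered.
static_assert(tlb_reach_bytes(kEntries4K, 4 * kKiB) == 256 * kKiB);
static_assert(tlb_reach_bytes(kEntries2M, 2 * kMiB) == 64 * kMiB);
```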

On the x86-64 architecture, even if a TLB miss happens, handling it will be less costly when huge pages are used. The translations from virtual pages into physical ones are stored in a multi-level structure in RAM. This structure for translations of 4 KB pages has one more level than for 2 MB pages. Similarly, translations of 2 MB pages use one more level than translations of 1 GB pages. In effect, one or two fewer RAM accesses are required on every TLB miss.
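The level counts can be written down explicitly. The sketch assumes classic 4-level x86-64 paging (9 index bits per level, 12 offset bits for a 4 KB page); processors running with 5-level paging add one more level on top. The function names are hypothetical.

```cpp
#include <cstdint>

// Assumption: 4-level x86-64 paging.
constexpr unsigned kBaseShift = 12;    // log2 of the 4 KB page size
constexpr unsigned kBitsPerLevel = 9;  // each table has 512 entries
constexpr unsigned kTopLevels = 4;

// Number of tables read to translate a page of 2^page_shift bytes:
// 12 -> 4 KB, 21 -> 2 MB, 30 -> 1 GB.
constexpr unsigned walk_levels(unsigned page_shift) {
    return kTopLevels - (page_shift - kBaseShift) / kBitsPerLevel;
}

// Index into the table at `level` (0 = top level) for a virtual address.
constexpr std::uint64_t table_index(std::uint64_t virt, unsigned level) {
    const unsigned shift = kBaseShift + kBitsPerLevel * (kTopLevels - 1 - level);
    return (virt >> shift) & ((std::uint64_t{1} << kBitsPerLevel) - 1);
}

static_assert(walk_levels(12) == 4);  // 4 KB page
static_assert(walk_levels(21) == 3);  // 2 MB page: one level fewer
static_assert(walk_levels(30) == 2);  // 1 GB page: two levels fewer
```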

Huge page support mechanisms in Linux

There are two ways of acquiring huge pages in Linux:

- HugeTLB

- Transparent HugePage (THP).

Both have their pros and cons, and neither of them is a clearly better solution. The transparent HugePage mechanism will be described in part 2. In this post, I focus on HugeTLB only.

HugeTLB

Huge pages pools

In HugeTLB there is one fundamental design assumption: huge pages are stored in pools. There exists exactly one pool for every huge page size supported by the kernel and CPU.

The sizes of the pools may be altered by changing these system-wide values (example for 2 MB pages, root privileges required):

/sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

/sys/kernel/mm/hugepages/hugepages-2048kB/nr_overcommit_hugepages

Alternatively, nr_hugepages may be set via an appropriate boot parameter (look here: HugeTLB Pages — The Linux Kernel documentation for more details).

The pool contains nr_hugepages pages, but it is allowed to grow up to nr_hugepages + nr_overcommit_hugepages. You can put an arbitrarily high number in nr_overcommit_hugepages. A big enough value effectively acts as no limit.
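Reading the current limits does not change anything in the system. A minimal sketch, assuming the 2 MB pool directory shown above exists on the machine; `read_counter` and `pool_limit_2mb` are hypothetical helper names.

```cpp
#include <cassert>
#include <fstream>
#include <limits>
#include <optional>
#include <string>

// Reads a single unsigned integer from a sysfs-style file.
// Returns std::nullopt if the file is missing or does not start with a number.
std::optional<unsigned long> read_counter(const std::string& path) {
    assert(!path.empty());
    std::ifstream file(path);
    unsigned long value = 0;
    if (!(file >> value)) {
        return std::nullopt;
    }
    return value;
}

// Upper bound of the 2 MB pool: nr_hugepages + nr_overcommit_hugepages.
std::optional<unsigned long> pool_limit_2mb() {
    const std::string dir = "/sys/kernel/mm/hugepages/hugepages-2048kB/";
    const auto persistent = read_counter(dir + "nr_hugepages");
    const auto overcommit = read_counter(dir + "nr_overcommit_hugepages");
    if (!persistent || !overcommit) {
        return std::nullopt;
    }
    // nr_overcommit_hugepages may hold an arbitrarily high number, so saturate.
    const unsigned long max = std::numeric_limits<unsigned long>::max();
    if (*overcommit > max - *persistent) {
        return max;
    }
    return *persistent + *overcommit;
}
```

A result of 0 means that no allocation from this pool can succeed until one of the two values is raised.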

⚠️ nr_hugepages may contain a lower number than the value written by the user if the kernel wasn’t able to allocate as many pages as requested. There is no explicit error info in such a case.

🚫 For 1 GB pages nr_overcommit_hugepages is equal to 0 and can’t be changed (at least on Linux kernel v. 6.4 and older). Sadly, this fact is not documented.

An attempt to obtain huge pages in a process succeeds in two cases:

- There are enough free, non-reserved huge pages of the requested size available in the pool.

- The nr_hugepages + nr_overcommit_hugepages limit won’t be exceeded after the reservation, and creating the necessary additional huge pages succeeds.

There are more caveats. Huge pages of a given size are neither available to use for huge page allocations of different sizes nor for allocations that use standard 4 KB pages. Moreover, huge pages managed by HugeTLB are available only for the applications that request them explicitly (unless a proper glibc tunable is used, as we’ll see later). In effect, OOM is possible when not enough memory is available outside huge pages pools.

Unfortunately, on Ubuntu (tested on 23.04), by default, huge page pools are empty and not allowed to grow. You need superuser permissions to change the limits.
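Because the kernel does not report an error when it allocates fewer pages than requested, it makes sense to read the value back after writing it. A minimal sketch under the assumption that the process runs with sufficient privileges; `resize_pool` is a hypothetical helper and the path is supplied by the caller.

```cpp
#include <cassert>
#include <fstream>
#include <optional>
#include <string>

// Writes `requested` to a pool-size file (for example nr_hugepages) and reads
// the value back. Returns the number the file holds afterwards, or
// std::nullopt if the file could not be written or read (typically: no root).
std::optional<unsigned long> resize_pool(const std::string& path, unsigned long requested) {
    assert(!path.empty());
    {
        std::ofstream out(path);
        if (!out) {
            return std::nullopt;
        }
        out << requested << '\n';
        out.flush();
        if (!out) {
            return std::nullopt;
        }
    }
    std::ifstream in(path);
    unsigned long actual = 0;
    if (!(in >> actual)) {
        return std::nullopt;
    }
    return actual;
}
```

A caller would compare the returned value with the requested one, for example treating `resize_pool(path, 128)` returning less than 128 as a partially satisfied request.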

Using HugeTLB via mmap flag

One possibility is to add the appropriate flag(s) to the mmap() call. This is a very low-level mechanism.

Allocating memory

Allocating memory is straightforward:

mmap(nullptr,

length_in_bytes,

PROT_READ | PROT_WRITE,

MAP_PRIVATE | MAP_ANONYMOUS | MAP_HUGETLB,

-1,

0);

The MAP_HUGETLB flag does two things:

- Ensures the mapped memory region will use HugeTLB mechanism.

- Ensures the mapped memory region will be aligned to huge page size.

Surprisingly, there is no word about anonymous memory mappings (i.e. not mapped to any existing file) in HugeTLB description in Linux kernel docs. Luckily, it works flawlessly.

Remember that mmap() only reserves memory pages (unless MAP_POPULATE flag is used). The allocation is done lazily. It will happen on the first access to the underlying memory page, not earlier. If multiple pages have been reserved by a single mmap() call, accessing a single page won’t cause allocating other pages.
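In practice the result of mmap() has to be checked, because the call fails when the pool cannot cover the reservation. A minimal sketch; `map_huge` is a hypothetical wrapper, and ENOMEM is what I would typically expect with an empty pool, though other error codes are possible.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <sys/mman.h>

// Maps `length` bytes backed by HugeTLB pages of the default size.
// Returns nullptr (after reporting errno) when the request cannot be satisfied.
void* map_huge(std::size_t length) {
    assert(length > 0);
    void* mem = mmap(nullptr, length, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS | MAP_HUGETLB, -1, 0);
    if (mem == MAP_FAILED) {
        // Typically ENOMEM when the pool is empty and not allowed to grow.
        std::fprintf(stderr, "mmap(MAP_HUGETLB) failed: %s\n", std::strerror(errno));
        return nullptr;
    }
    // Pages are only reserved here; each one is allocated on its first access.
    return mem;
}
```

After a successful call, the first read or write to each huge page is the moment when that page is actually allocated.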

I strongly discourage using the MAP_NORESERVE flag in combination with MAP_HUGETLB. mmap() with MAP_NORESERVE has nasty behavior when there are not enough huge pages available. The mmap() call always succeeds, but later, on the first access to a memory page that can’t be allocated, the process receives a SIGBUS signal. This complicates error handling significantly and makes a solution proposed by Paul Khuong on Twitter virtually useless. Don’t worry about swap – in Linux, huge pages cannot be swapped out anyway.

Request huge pages of specific size

You can manually specify the desired page size when calling mmap() by passing one more flag:

#include <linux/mman.h>

...

mmap(nullptr,

length_in_bytes,

PROT_READ | PROT_WRITE,

MAP_PRIVATE | MAP_ANONYMOUS | MAP_HUGETLB | MAP_HUGE_1GB,

-1,

0);

This way, you can mix the sizes of huge pages in the same program, for example. There are two predefined flags: MAP_HUGE_1GB and MAP_HUGE_2MB. There is also a possibility to create and use similar flags for other sizes if supported by kernel and CPU. Look at mmap(2) - Linux manual page for more details.
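Flags for other sizes follow the encoding described in mmap(2): the base-2 logarithm of the page size is stored in the bits starting at MAP_HUGE_SHIFT. A minimal sketch, assuming the headers on your system provide MAP_HUGE_SHIFT and MAP_HUGE_MASK; `huge_size_flag` is a hypothetical helper.

```cpp
#include <sys/mman.h>
#include <linux/mman.h>

// Builds the mmap() size flag for huge pages of 2^log2_size bytes.
constexpr int huge_size_flag(unsigned log2_size) {
    return static_cast<int>((log2_size & MAP_HUGE_MASK) << MAP_HUGE_SHIFT);
}

// The predefined flags are instances of the same encoding.
static_assert(static_cast<unsigned>(huge_size_flag(21)) == static_cast<unsigned>(MAP_HUGE_2MB));
static_assert(static_cast<unsigned>(huge_size_flag(30)) == static_cast<unsigned>(MAP_HUGE_1GB));
```

For example, `MAP_HUGETLB | huge_size_flag(16)` would ask for 64 KB huge pages, which only works on hardware and kernels that support this size.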

Deallocating memory

Deallocation is simple: just call munmap():

munmap(address, length_in_bytes);

Both address and length_in_bytes must be multiples of the huge page size, so be cautious when returning only part of the mapped memory. It’s a bit unusual to unmap only a part of the memory mapped by an earlier mmap() call, but it is possible.
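For HugeTLB mappings, munmap() expects both the address and the length to be multiples of the huge page size. Two small helpers can guard against mistakes; their names are hypothetical and the page size is passed in by the caller.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Rounds `length` up to the nearest multiple of `page_size` (a power of two).
constexpr std::size_t round_up_to_page(std::size_t length, std::size_t page_size) {
    return (length + page_size - 1) & ~(page_size - 1);
}

// True when (address, length) is aligned well enough to be passed to munmap()
// for a mapping that uses pages of `page_size` bytes.
bool is_valid_unmap_range(const void* address, std::size_t length, std::size_t page_size) {
    assert(page_size != 0 && (page_size & (page_size - 1)) == 0);
    const auto value = reinterpret_cast<std::uintptr_t>(address);
    return length != 0 && value % page_size == 0 && length % page_size == 0;
}
```

When returning only the tail of a mapping, both the start of the tail and its length have to pass this check.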

Using HugeTLB via glibc tunable

A very interesting alternative to mmap() with the MAP_HUGETLB flag is using the glibc.malloc.hugetlb tunable. It controls whether malloc (and realloc) will use huge pages to get memory from the OS, which mechanism will be used (HugeTLB or THP), and what the size of the huge pages will be.

It’s worth mentioning that the new operator in C++ uses malloc under the hood. That’s not required by the C++ standard but it is typically implemented this way. Also, the default memory allocator in C++ uses the new operator. In effect, all the memory allocation requests in a C/C++ application that don’t use non-default allocators will use huge pages if they are available in the pools.

What happens when mmap() with the MAP_HUGETLB flag called by malloc() fails? Turns out that in such a scenario mmap() is called once again without the MAP_HUGETLB flag. Often this call will succeed if the previous failure was caused by depleting the pool of huge pages. It’s a reasonable behavior, but it degrades performance, so it’s worth knowing that it works this way.
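The same try-then-retry idea can be used in application code that calls mmap() directly. The sketch below is similar in spirit to the fallback described above, but it is not glibc's actual implementation; the `Mapping` struct and `map_with_fallback` function are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>

struct Mapping {
    void* address = nullptr;  // nullptr when both attempts failed
    bool huge = false;        // true when the HugeTLB attempt succeeded
};

// Tries HugeTLB first and retries with standard pages on failure.
Mapping map_with_fallback(std::size_t length) {
    assert(length > 0);
    const int prot = PROT_READ | PROT_WRITE;
    const int flags = MAP_PRIVATE | MAP_ANONYMOUS;

    void* mem = mmap(nullptr, length, prot, flags | MAP_HUGETLB, -1, 0);
    if (mem != MAP_FAILED) {
        return {mem, true};
    }
    mem = mmap(nullptr, length, prot, flags, -1, 0);  // fallback: standard pages
    if (mem != MAP_FAILED) {
        return {mem, false};
    }
    return {};
}
```

Keeping the `huge` flag around lets the caller log or count how often the fallback path is taken, which is exactly the silent performance degradation to watch for.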

The value of this tunable may be set via environment variable, for example:

GLIBC_TUNABLES=glibc.malloc.hugetlb=2 ./executable

Value 2 selects HugeTLB with the system-wide default huge page size. Alternatively, a value bigger than 2 specifies the desired huge page size, 1 asks for Transparent Huge Pages, and 0 disables any additional huge page support.

What is really cool about this tunable is the fact that, in most cases, we may change it even without access to the source code. It may be set in the code as well. For more details, look here: Memory Allocation Tunables (The GNU C Library)

Memory fragmentation issues

The allocation will always succeed if there are enough free pages in the pool. However, reserving an overcommit page may fail even if nr_overcommit_hugepages is not exceeded. That may obviously happen when physical memory is depleted. A slightly less obvious case is when there is not enough contiguous physical memory to allocate a huge page. This is not unlikely when huge pages are mixed with standard-size pages (which is almost always the case). The longer the system runs and the higher the memory pressure, the bigger the chance of failure due to memory fragmentation.

It’s kind of a weakness of HugeTLB. In THP, you can decide what should happen when allocation is not possible. For example, you can enforce instant compaction of existing 4 KB pages to reduce fragmentation. In HugeTLB, you will find nothing like that. When it fails, it fails.

HugeTLB statistics

The command cat /proc/meminfo | grep '^Huge' turns out to be handy. Example output:

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

You may find a description of what these values mean in Linux kernel documentation.
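The same counters can be collected from inside an application, for example to log them next to allocation failures. A minimal sketch; `parse_counters` is a hypothetical helper that keeps the lines starting with a given prefix, mirroring the grep above.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Extracts counters whose names start with `prefix` from text in the
// /proc/meminfo format. Values keep the unit of the source line
// (pages for HugePages_*, kB for Hugepagesize and Hugetlb).
std::map<std::string, unsigned long> parse_counters(std::istream& input,
                                                    const std::string& prefix = "Huge") {
    assert(!prefix.empty());
    std::map<std::string, unsigned long> counters;
    std::string line;
    while (std::getline(input, line)) {
        if (line.compare(0, prefix.size(), prefix) != 0) {
            continue;  // not at the start of the line, like grep '^Huge'
        }
        const auto colon = line.find(':');
        if (colon == std::string::npos) {
            continue;
        }
        std::istringstream rest(line.substr(colon + 1));
        unsigned long value = 0;
        if (rest >> value) {
            counters[line.substr(0, colon)] = value;
        }
    }
    return counters;
}
```

Passing a `std::ifstream` opened on /proc/meminfo yields the live values; a string stream works equally well for testing.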

Transparent Huge Page (THP)

THP will be described in a separate blog post soon. Key differences between HugeTLB and THP will be shown – stay tuned!

References

HugeTLB Pages — The Linux Kernel documentation – detailed explanation. Very useful if you want to gain a deep understanding of the topic.

A. Enabling Hugepages – contains instructions on how to change boot parameters that affect HugeTLB.

mmap(2) - Linux manual page – description of mmap() call.

Memory Allocation Tunables (The GNU C Library) – detailed description of memory allocation tunables in glibc.

Concepts overview — The Linux Kernel documentation – overview of concepts used in memory management. It’s a kind of mandatory knowledge for somebody who wants to learn advanced uses of memory in Linux.